I will be at DIA 2012 this week, and hope to post about sessions and new offerings of interest as I encounter them.

There is an inherent tension in this year’s meeting theme, “Collaborate to Innovate”. Collaboration at its best exposes us to new ideas and, even more importantly, new ways of thinking. That novelty can catalyze our own thinking and bring us to flashes of insight, producing creative solutions to our most intractable problems.

However, collaboration often requires accommodation – multiple stakeholders with their own wants and needs that must be fairly and collectively addressed. Too often, this has the unfortunate result of sacrificing creativity for the sake of finding a solution that everyone can accept. Rather than blazing a new trail (with all its attendant risks), we are forced to find an established trail wide enough to accommodate everybody. Some of the least creative work in our industry is done by joint ventures and partnered programs.

So perhaps the best way forward is: Collaborate, but not too much. We must take time to seek out and integrate new approaches, but real breakthroughs tend to require a lot of individual courage and determination.

Monday, June 25, 2012

Wednesday, June 20, 2012

Faster Trials are Better Trials

[Note: this post is an excerpt from a longer presentation I made at the DIA Clinical Data Quality Summit, April 24, 2012, entitled Delight the Sites: The Effect of Site/Sponsor Relationships on Site Performance.]

When considering clinical data collected from sites, what is the relationship between these two factors?

Obviously, this has serious implications for those of us in the business of accelerating clinical trials. If getting studies done faster comes at the expense of clinical data quality, then the value of the entire enterprise is called into question. As regulatory authorities take an increasingly skeptical attitude towards missing, inconsistent, and inaccurate data, we must strive to make data collection better, and absolutely cannot afford to risk making it worse.

As a result, we've started to look closely at a variety of data quality metrics to understand how they relate to the pace of patient recruitment. The results, while still preliminary, are encouraging.

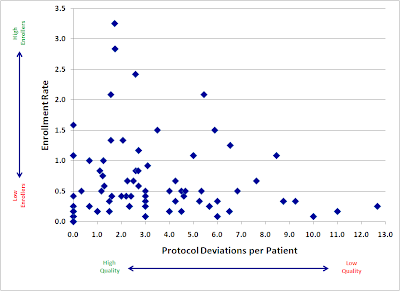

Here is a plot of a large, recently-completed trial. Each point represents an individual research site, mapped by both speed (enrollment rate) and quality (protocol deviations). If faster enrolling caused data quality problems, we would expect to see a cluster of sites in the upper right quadrant (lots of patients, lots of deviations).

Instead, we see almost the opposite. Our sites with the fastest accrual produced, in general, higher quality data. Slow sites had a large variance, with not much relation to quality: some did well, but some of the worst offenders were among the slowest enrollers.

There are probably a number of reasons for this trend. I believe the two major factors at work here are:

We will continue to explore the relationship between enrollment and various quality metrics, and I hope to be able to share more soon.

When considering clinical data collected from sites, what is the relationship between these two factors?

- Quantity: the number of patients enrolled by the site

- Quality: the rate of data issues per enrolled patient

Obviously, this has serious implications for those of us in the business of accelerating clinical trials. If getting studies done faster comes at the expense of clinical data quality, then the value of the entire enterprise is called into question. As regulatory authorities take an increasingly skeptical attitude towards missing, inconsistent, and inaccurate data, we must strive to make data collection better, and absolutely cannot afford to risk making it worse.

As a result, we've started to look closely at a variety of data quality metrics to understand how they relate to the pace of patient recruitment. The results, while still preliminary, are encouraging.

Here is a plot of a large, recently completed trial. Each point represents an individual research site, mapped by both speed (enrollment rate) and quality (protocol deviations). If faster enrollment caused data quality problems, we would expect to see a cluster of sites in the upper right quadrant (lots of patients, lots of deviations).

Instead, we see almost the opposite. Our sites with the fastest accrual produced, in general, higher-quality data. Slow sites varied widely in quality: some did well, but some of the worst offenders were among the slowest enrollers.

There are probably a number of reasons for this trend. I believe the two major factors at work here are:

- Focus. Having more patients in a particular study gives sites a powerful incentive to devote more time and effort to the conduct of that study.

- Practice. We get better at most things through practice and repetition. Enrolling more patients may help our site staff develop a much greater mastery of the study protocol.

We will continue to explore the relationship between enrollment and various quality metrics, and I hope to be able to share more soon.
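For readers who want to try this kind of comparison on their own studies, here is a minimal sketch in Python of the per-site metric described above (deviations per enrolled patient), with sites split into slower and faster enrollers around the median. The `Site` class, the `split_by_enrollment` helper, and every number in the example are hypothetical and purely illustrative; none of it is data from the trial plotted above, and it is not necessarily how our own analysis was run.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Site:
    site_id: str
    enrolled: int    # patients enrolled at the site (quantity)
    deviations: int  # protocol deviations recorded (quality proxy)

    @property
    def deviation_rate(self) -> float:
        """Deviations per enrolled patient; lower is better.

        Assumes enrolled > 0, so non-enrolling sites must be excluded first.
        """
        return self.deviations / self.enrolled


def split_by_enrollment(sites):
    """Split sites into (slow, fast) groups around the median enrollment."""
    cutoff = median(s.enrolled for s in sites)
    slow = [s for s in sites if s.enrolled <= cutoff]
    fast = [s for s in sites if s.enrolled > cutoff]
    return slow, fast


# Made-up numbers for illustration only; NOT data from any real trial.
sites = [
    Site("A", enrolled=2, deviations=4),
    Site("B", enrolled=3, deviations=0),
    Site("C", enrolled=10, deviations=5),
    Site("D", enrolled=20, deviations=4),
]

slow, fast = split_by_enrollment(sites)
for label, group in (("slow", slow), ("fast", fast)):
    rates = [round(s.deviation_rate, 2) for s in group]
    print(label, rates)
```

Normalizing by enrolled patients matters here: raw deviation counts would make high-enrolling sites look worse simply because they have more patients in whom a deviation can occur.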

Labels: data quality, FDA, metrics, operations, patient recruitment, research sites, statistics

Tuesday, June 19, 2012

Pfizer Shocker: Patient Recruitment is Hard

In what appears to be, oddly enough, an exclusive announcement to Pharmalot, Pfizer will be discontinuing its much-discussed “Trial in a box”—a clinical study run entirely from a patient’s home. Study drug and other supplies would be shipped directly to each patient, with consent, communication, and data collection happening entirely via the internet.

The trial piloted a number of innovations, including some novel and intriguing Patient Reported Outcome (PRO) tools. Unfortunately, most of these will likely not have been given the benefit of a full test, as the trial was killed due to low patient enrollment.

The fact that a trial designed to enroll fewer than 300 patients couldn’t meet its enrollment goal is sobering enough, but in this case the pain is even greater because the study was not limited to site databases and/or catchment areas. In theory, anyone with overactive bladder in the entire United States was a potential participant.

And yet, it didn’t work. In a previous interview with Pharmalot, Pfizer’s Craig Lipset mentions a number of recruitment channels – he specifically cites Facebook, Google, Patients Like Me, and Inspire, along with other unspecified “online outreach” – that drove “thousands” of impressions and “many” registrations, but these apparently did not come even close to the required number of consented patients.

Two major questions come to mind:

1. How were patients “converted” into the study? One of the more challenging aspects of patient recruitment is often getting research sites engaged in the process. Many – perhaps most – patients are understandably on the fence about being in a trial, and the investigator and study coordinator play the single most critical role in helping each patient make their decision. You cannot simply replace their skill and experience with a website (or “multi-media informed consent module”).

2. Did they understand the patient funnel? I am puzzled by the mention of “thousands of hits” to the website. That may seem like a lot if you’re not used to engaging patients online, but it actually isn’t.

Despite some of the claims made by patient communities, it is perfectly reasonable to expect that fewer than 1% of visitors (even somewhat pre-qualified visitors) will end up consenting into the study. If you’re going to rely on the internet as your sole means of recruitment, you should plan on needing closer to 100,000 visitors (and, critically, negotiate your spending accordingly).
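To make the funnel arithmetic concrete, here is a minimal sketch in Python. The 300-patient target is the upper bound mentioned above; the conversion rates are illustrative assumptions rather than measured values, and `visitors_needed` is a hypothetical helper name. Rates are passed as strings so that `Fraction` can do the division exactly.

```python
import math
from fractions import Fraction


def visitors_needed(target_consented: int, conversion_rate: str) -> int:
    """Visitors required to reach a consent target.

    conversion_rate is the visitor-to-consent rate as a decimal string
    (for example "0.01" for 1%), parsed exactly to avoid float rounding.
    """
    rate = Fraction(conversion_rate)
    if not 0 < rate <= 1:
        raise ValueError("conversion_rate must be greater than 0 and at most 1")
    return math.ceil(target_consented / rate)


TARGET = 300  # upper bound: the trial aimed for fewer than 300 patients

# Illustrative conversion rates only; these are assumptions, not study data.
for rate in ("0.01", "0.005", "0.003"):
    needed = visitors_needed(TARGET, rate)
    print(f"conversion {rate}: {needed:,} visitors")
```

At exactly 1% conversion the target works out to 30,000 visitors; at 0.3% it reaches 100,000. Either way, “thousands” of hits leaves a study like this far short.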

Jakob Nielsen's famous "Lurker Funnel" seems worth mentioning here...

In the prior interview, Lipset says:

I think some of the staunch advocates for using online and social media for recruitment are still reticent to claim silver bullet status and not use conventional channels in parallel. Even the most aggressive and bullish social media advocates, generally, still acknowledge you’re going to do this in addition to, and not instead of more conventional channels.

This makes Pfizer’s exclusive reliance on these channels all the more puzzling. If no one is advocating disintermediating the sites and using only social media, then why was this the strategy?

I am confident that someone will try again with this type of trial in the near future. Hopefully, the Pfizer experience will spur them to invest in building a more rigorous recruitment strategy before they start.

[Update 6/20: Lipset weighed in via the comments section of the Pharmalot article above to clarify that other DTP aspects of the trial were tested and "worked VERY well". I am not sure how to evaluate that clarification, given that those aspects couldn't have been tested on a very large number of patients, but it is encouraging to hear that more positive experiences may have come out of the study.]
